SparseSoftmaxCrossEntropyWithLogits

TensorFlow C++ API

tensorflow::ops::SparseSoftmaxCrossEntropyWithLogits

Computes softmax cross entropy cost and gradients to backpropagate.

Summary

Unlike SoftmaxCrossEntropyWithLogits, this operation does not accept a matrix of label probabilities. Instead, it takes a single label per row of features, and that label is treated as having probability 1.0 for its row.

Inputs are the logits, not probabilities.
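Because each row has exactly one label with probability 1.0, the two outputs reduce to simple per-row formulas. The notation here is introduced for illustration: x_i is row i of features, y_i its label, and C is num_classes. The loss is the negative log of the softmax probability assigned to the true class, and backprop is the gradient of that loss with respect to the logits:

```latex
\ell_i = -\log \frac{e^{x_{i,y_i}}}{\sum_{j=0}^{C-1} e^{x_{i,j}}}
       = \log \sum_{j=0}^{C-1} e^{x_{i,j}} - x_{i,y_i},
\qquad
\frac{\partial \ell_i}{\partial x_{i,j}} = \operatorname{softmax}(x_i)_j - \mathbb{1}[j = y_i]
```

This is also why the inputs must be logits: the softmax is applied inside the operation, so passing probabilities would apply it twice.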

Arguments:

- scope: A Scope object

- features: batch_size x num_classes matrix

- labels: batch_size vector with values in [0, num_classes). This is the label for the given minibatch entry.

Returns:

- Output loss: Per-example loss (batch_size vector).

- Output backprop: Backpropagated gradients (batch_size x num_classes matrix).
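To make the two outputs concrete, the following is a minimal sketch that recomputes them on plain std::vector buffers, without TensorFlow. SparseXentResult and SparseSoftmaxXentReference are hypothetical names introduced only for this illustration. The row-major layout, int64_t labels (the op also accepts int32 labels), and throwing on an out-of-range label are assumptions of the sketch, not a description of TensorFlow's actual kernel.

```cpp
#include <algorithm>
#include <cmath>
#include <cstddef>
#include <cstdint>
#include <stdexcept>
#include <vector>

// Hypothetical result type for this sketch (not a TensorFlow type).
struct SparseXentResult {
  std::vector<float> loss;      // batch_size entries
  std::vector<float> backprop;  // batch_size * num_classes, row-major
};

// Hypothetical reference helper: computes the per-example loss and the
// gradient w.r.t. the logits for one-label-per-row cross entropy.
SparseXentResult SparseSoftmaxXentReference(const std::vector<float>& features,
                                            const std::vector<int64_t>& labels,
                                            std::size_t num_classes) {
  const std::size_t batch_size = labels.size();
  if (num_classes == 0 || features.size() != batch_size * num_classes) {
    throw std::invalid_argument("features must be batch_size x num_classes");
  }
  SparseXentResult out;
  out.loss.resize(batch_size);
  out.backprop.resize(batch_size * num_classes);
  for (std::size_t i = 0; i < batch_size; ++i) {
    const int64_t y = labels[i];
    // Labels must lie in [0, num_classes); this sketch throws otherwise.
    if (y < 0 || static_cast<std::size_t>(y) >= num_classes) {
      throw std::out_of_range("label must be in [0, num_classes)");
    }
    const float* row = &features[i * num_classes];
    // Subtract the row maximum before exponentiating to avoid overflow.
    const float max_logit = *std::max_element(row, row + num_classes);
    float sum_exp = 0.0f;
    for (std::size_t j = 0; j < num_classes; ++j) {
      sum_exp += std::exp(row[j] - max_logit);
    }
    const float log_sum_exp = max_logit + std::log(sum_exp);
    // loss_i = logsumexp(row) - row[label]
    out.loss[i] = log_sum_exp - row[y];
    // backprop_ij = softmax(row)_j - (1 if j is the label, else 0)
    for (std::size_t j = 0; j < num_classes; ++j) {
      const float p = std::exp(row[j] - log_sum_exp);
      const float target = (static_cast<int64_t>(j) == y) ? 1.0f : 0.0f;
      out.backprop[i * num_classes + j] = p - target;
    }
  }
  return out;
}
```

For example, a single row of equal logits {0, 0} with label 0 gives a loss of log 2 (about 0.693) and gradients {-0.5, 0.5}. Each row of backprop sums to zero, since the softmax probabilities sum to 1 and exactly one target entry is 1.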

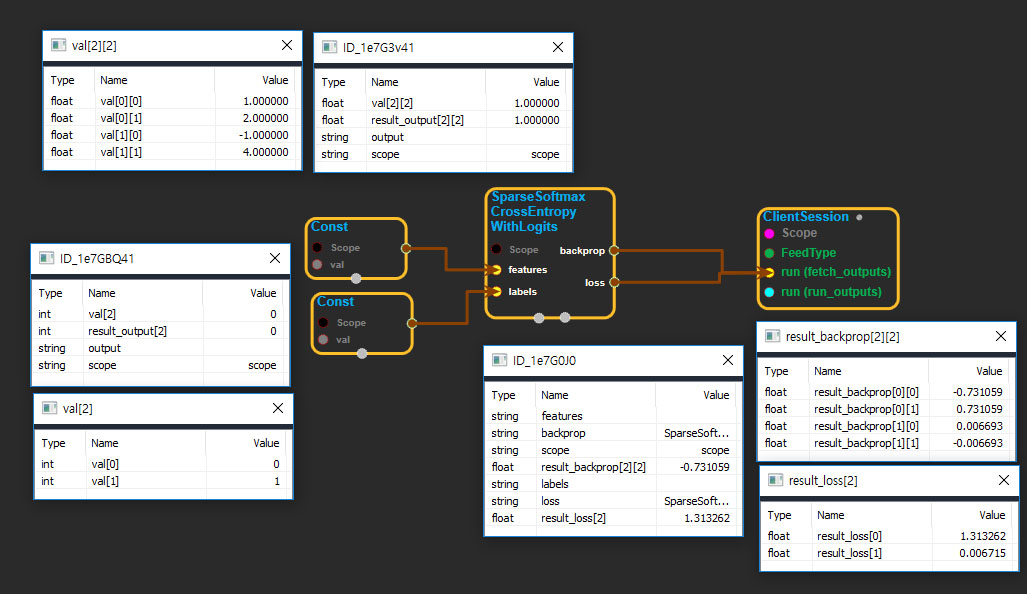

SparseSoftmaxCrossEntropyWithLogits block

Source link: https://github.com/EXPNUNI/enuSpaceTensorflow/blob/master/enuSpaceTensorflow/tf_nn.cpp

Arguments:

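- Scope scope: A Scope object. A scope is generated automatically for each page, so it does not need to be connected.

- Input features: Connect an Input node (the batch_size x num_classes matrix of logits).

- Input labels: Connect an Input node (the batch_size vector of class labels).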

Returns:

- Output loss: The per-example loss output of the SparseSoftmaxCrossEntropyWithLogits object.

- Output backprop: The backpropagated-gradient output of the SparseSoftmaxCrossEntropyWithLogits object.

Result:

- std::vector<Tensor> result_loss: The result returned when the loss output is evaluated in a session.

- std::vector<Tensor> result_backprop: The result returned when the backprop output is evaluated in a session.

Using Method