ApplyRMSProp

TensorFlow C++ API

Update '*var' according to the RMSProp algorithm.

Summary

Note that in the dense implementation of this algorithm (this op), ms and mom are updated even when grad is zero, whereas in the sparse implementation (SparseApplyRMSProp), ms and mom are not updated in iterations during which grad is zero.

mean_square = decay * mean_square + (1-decay) * gradient ** 2

Delta = learning_rate * gradient / sqrt(mean_square + epsilon)

ms <- rho * ms_{t-1} + (1-rho) * grad * grad

mom <- momentum * mom_{t-1} + lr * grad / sqrt(ms + epsilon)

var <- var - mom
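The three update formulas above can be sketched in plain C++ without TensorFlow. This is only an illustration of the arithmetic, not the library's kernel: `rmsprop_step` is a hypothetical helper name, and it assumes that var, ms, mom, and grad are equally sized vectors of doubles.

```cpp
#include <cmath>
#include <cstddef>
#include <vector>

// Hypothetical helper, not part of TensorFlow: applies one dense RMSProp
// step in place, element by element, following the three update formulas.
// Assumes var, ms, mom, and grad all have the same length.
void rmsprop_step(std::vector<double>& var, std::vector<double>& ms,
                  std::vector<double>& mom, const std::vector<double>& grad,
                  double lr, double rho, double momentum, double epsilon) {
  for (std::size_t i = 0; i < var.size(); ++i) {
    // ms <- rho * ms_{t-1} + (1 - rho) * grad * grad
    ms[i] = rho * ms[i] + (1.0 - rho) * grad[i] * grad[i];
    // mom <- momentum * mom_{t-1} + lr * grad / sqrt(ms + epsilon)
    mom[i] = momentum * mom[i] + lr * grad[i] / std::sqrt(ms[i] + epsilon);
    // var <- var - mom
    var[i] -= mom[i];
  }
}
```

As a worked example, start from var = 1, ms = 0, mom = 0 with lr = 0.1, rho = 0.75, momentum = 0.5, and epsilon = 0. A step with grad = 2 gives ms = 1, mom = 0.2, and var = 0.8. A following step with grad = 0 still changes ms to 0.75 and mom to 0.1, moving var to 0.7, which is the dense behavior described in the note above.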

Arguments:

- scope: A Scope object

- var: Should be from a Variable().

- ms: Should be from a Variable().

- mom: Should be from a Variable().

- lr: Scaling factor. Must be a scalar.

- rho: Decay rate. Must be a scalar.

- momentum: Momentum coefficient applied to mom in the update. Must be a scalar.

- epsilon: Ridge term. Must be a scalar.

- grad: The gradient.

Optional attributes (see Attrs):

- use_locking: If True, updating of the var, ms, and mom tensors is protected by a lock; otherwise the behavior is undefined, but may exhibit less contention.
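To illustrate what "protected by a lock" means here, the following is a minimal sketch in plain C++. It is not TensorFlow's implementation: `RmsPropSlot` and `apply_step` are hypothetical names, and the sketch assumes a single mutex guards one scalar slot each of var, ms, and mom.

```cpp
#include <cmath>
#include <mutex>

// Hypothetical state holder, not a TensorFlow type: one scalar slot each
// for var, ms, and mom, plus the mutex that guards them.
struct RmsPropSlot {
  double var = 0.0, ms = 0.0, mom = 0.0;
  std::mutex mu;
};

// Applies one scalar RMSProp step. When use_locking is true the whole
// read-modify-write sequence runs under the mutex; when false it runs
// unguarded, which is only safe if no other thread touches the slot.
void apply_step(RmsPropSlot& s, double grad, double lr, double rho,
                double momentum, double epsilon, bool use_locking) {
  std::unique_lock<std::mutex> lock(s.mu, std::defer_lock);
  if (use_locking) lock.lock();  // released automatically on return
  s.ms = rho * s.ms + (1.0 - rho) * grad * grad;
  s.mom = momentum * s.mom + lr * grad / std::sqrt(s.ms + epsilon);
  s.var -= s.mom;
}
```

With the lock, concurrent callers see var, ms, and mom updated together as one unit. Without it, updates from different threads can interleave between the three assignments, which is the trade-off against contention that the attribute description refers to.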

Returns:

Output: Same as "var".

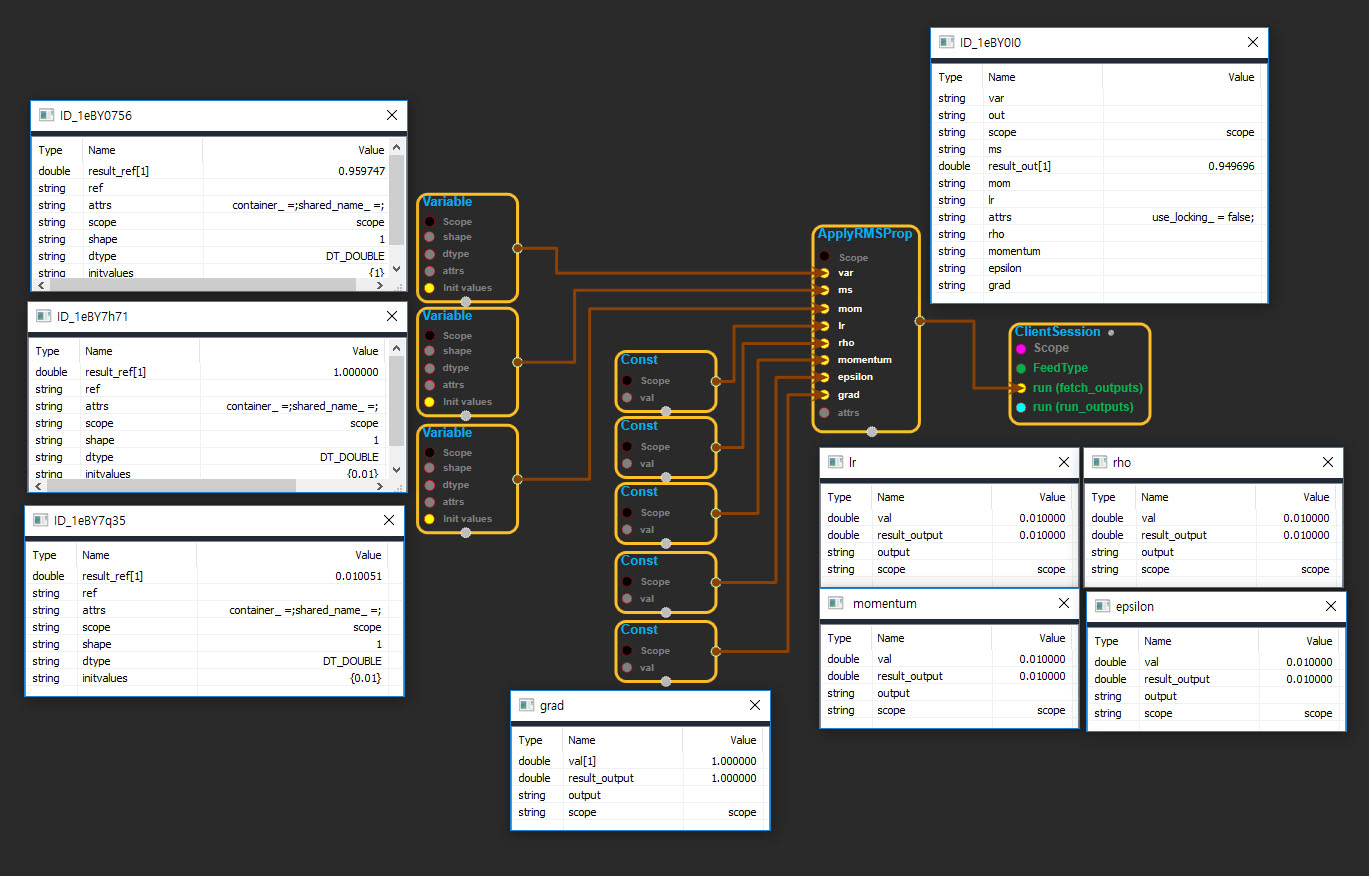

ApplyRMSProp block

Source link : https://github.com/EXPNUNI/enuSpaceTensorflow/blob/master/enuSpaceTensorflow/tf_training.cpp

Arguments:

- Scope scope : A Scope object. (A scope is generated automatically for each page, so the scope itself does not need to be connected.)

- Input var: connect Input node.

- Input ms: connect Input node.

- Input mom: connect Input node.

- Input lr: connect Input node.

- Input rho: connect Input node.

- Input momentum: connect Input node.

- Input epsilon: connect Input node.

- Input grad: connect Input node.

- ApplyRMSProp::Attrs attrs : Enter the attrs as a value, e.g. use_locking_ = false;

Return:

- Output output : Output object of the ApplyRMSProp class.

Result:

- std::vector(Tensor) result_output : The result returned by running the session.

Using Method